What Is Affective Computing?

Affective Computing is “computing that relates to, arises from, or deliberately influences emotion or other affective phenomena” (Picard, 2000). This research field concentrates on developing computer systems that can perceive and recognize the user’s feelings, and ultimately experience and express feelings of their own, in order to make human-computer interaction better and more natural.

This is a new field of research, first backed and promoted by MIT under the leadership of Rosalind Picard. She has led MIT’s Affective Computing research lab since its creation in 1997 (MIT, 2018). She argues that computers should be able to recognize and express human emotions in order to qualify as “genuinely intelligent” entities (Picard, 2000). She has appeared in no fewer than 310 publications in the field since 1997 (MIT, 2018).

The Significance of Affective Computing as an Emerging Technology

This technology is exciting because it draws on a wide range of skills across computer science, psychology, neuroscience, healthcare, communication, mathematics, and statistics. Before any system can be designed, emotions and their expression must be defined and categorized. Once that is done, the Hidden Markov Model (HMM) is a statistical tool well suited to detecting emotions. It models a system as a finite set of internal states that cannot be observed directly, and infers the current state from a sequence of observations (Picard, 2000).

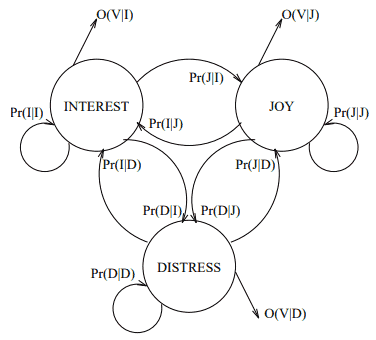

Below is an HMM example with three states of mind (Interest, Distress, or Joy):

The Hidden Markov Model shown here characterizes probabilities of transitions among three “hidden” states, (I,D,J), as well as probabilities of observations (measurable essentic forms, such as features of voice inflection, V) given a state (Picard, 1995).
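To make the inference step concrete, here is a minimal sketch of forward filtering on a three-state HMM like the one described above. Every number in it is an assumption made up for illustration, as are the three voice-inflection categories (`flat`, `tense`, `bright`); none of these values come from Picard (1995).

```python
# Hypothetical three-state HMM. All probabilities are illustrative only.
STATES = ["Interest", "Distress", "Joy"]

# P(first hidden state)
START = {"Interest": 0.5, "Distress": 0.2, "Joy": 0.3}

# P(next state | current state)
TRANSITION = {
    "Interest": {"Interest": 0.7, "Distress": 0.1, "Joy": 0.2},
    "Distress": {"Interest": 0.2, "Distress": 0.7, "Joy": 0.1},
    "Joy": {"Interest": 0.3, "Distress": 0.1, "Joy": 0.6},
}

# P(observed voice inflection | state); the categories are assumptions.
EMISSION = {
    "Interest": {"flat": 0.6, "tense": 0.2, "bright": 0.2},
    "Distress": {"flat": 0.2, "tense": 0.7, "bright": 0.1},
    "Joy": {"flat": 0.1, "tense": 0.1, "bright": 0.8},
}


def filter_states(observed):
    """Return P(current state | all observations so far) via the forward algorithm."""
    prior = dict(START)
    belief = dict(START)
    for obs in observed:
        # Weight the prior by how well each state explains the observation.
        unnormalized = {s: prior[s] * EMISSION[s][obs] for s in STATES}
        total = sum(unnormalized.values())
        belief = {s: unnormalized[s] / total for s in STATES}
        # Propagate the belief one step through the transition model.
        prior = {
            s: sum(belief[p] * TRANSITION[p][s] for p in STATES) for s in STATES
        }
    return belief


def most_likely_state(observed):
    """Pick the state with the highest filtered probability."""
    belief = filter_states(observed)
    return max(belief, key=belief.get)


if __name__ == "__main__":
    print(most_likely_state(["flat", "tense", "tense"]))
```

The hidden state is never read directly: each new observation reweights the current belief, and the transition table carries that belief forward in time.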

This statistical model is applied in pattern recognition, economics, biosciences, climatology, and, of course, machine learning (Wojciech Pieczynski, 2007).

Growing interest in these promising applications is driving research in Affective Computing. Applications such as virtual reality, smart surveillance, and perceptual interfaces (Picard, Tao, & Tan, 2005) stand to benefit from this research. Humans convey a wide range of emotions that are part of the message when they communicate. Emotional awareness and empathy are powerful communication skills that are reported to create “safe, functional, and relieving relations” (Ioannidou & Konstantikaki, 2008) between those who demonstrate Emotional Intelligence. On the other hand, mastering these skills can also help manipulate and shape the mind of an audience. This emotional channel is lost at our current level of computer interaction: keyboards and mice are very limited sensors.

Emotions also play a major role in the learning process, an idea captured by reinforcement-style models such as Brain Emotional Learning (BEL) (Liu et al., 2018). We tend to remember better when we are emotionally involved: interest, love, hate, and even fear are strongly linked to memorization mechanisms. Who doesn’t remember their childhood accidents as clearly as if they had happened yesterday? Detecting emotions such as a student’s frustration can help intelligent systems initiate a feedback interaction (Kapoor, Burleson, & Picard, 2007). Kapoor studied this kind of detection with an intelligent tutoring application that involved “non-verbal channels carrying affective cues” (Kapoor et al., 2007). The study illustrates how complex such implementations are: they require detection algorithms fitted to an emotional model that must first be defined, combined with a set of carefully chosen physical sensors.

Real Life Potentials

Real-life applications of affective computing are not limited to Machine Learning and Artificial Intelligence. The development of behavioral and emotional models has brought new ideas and applications for devices that detect human emotions. These developments have led researchers to investigate the potential benefits for healthcare and for conditions such as autism. Affective wearable devices that “reports on social-emotional information in real-time to assist individuals with autism” (El Kaliouby, Teeters, & Picard, 2006) have been studied and have demonstrated the benefits of affective computing in assisting individuals who have autism, a result later confirmed by another MIT study (Hoque, 2008). This is one reason the field has gained momentum in recent years.

Autism Study

Working with children with Autism Spectrum Disorder (ASD) is a challenge for therapists, and a 2008 study conducted at Vanderbilt University aimed to develop “understanding” interactive technologies for potential use in future technology-assisted ASD intervention (Conn, Liu, Sarkar, Stone, & Warren, 2008). The first goal was to employ human-computer interaction (HCI) and human-robot interaction (HRI) technologies as “interactive therapeutic tools” to “partially automate time-consuming, routine behavioral therapy sessions” (Conn et al., 2008). The second goal was to explore ways to build a more economical model by reducing the number of sensors while achieving better inferences.

The machine learning model they used is called a Support Vector Machine (SVM). It is a supervised learning model that maps input vectors, for example through polynomial transformations, into a “very high-dimension feature space” in which a linear decision surface is constructed (Cortes & Vapnik, 1995).
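The mapping into a higher-dimensional feature space can be illustrated with a small sketch. It assumes two-dimensional inputs and a degree-2 polynomial kernel, and shows that the kernel value equals an ordinary dot product taken after an explicit polynomial transformation. The function names and sample vectors are hypothetical, and this is not the feature set or kernel configuration used by Conn et al. (2008).

```python
import math


def dot(u, v):
    """Plain dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def poly_kernel(x, y, degree=2, coef0=1.0):
    """Polynomial kernel (x . y + coef0) ** degree, computed in input space."""
    return (dot(x, y) + coef0) ** degree


def explicit_features(x):
    """Explicit degree-2 feature map for a 2-D input (with coef0 = 1).

    Maps (x1, x2) to a 6-D vector so that
    dot(explicit_features(x), explicit_features(y)) == (x . y + 1) ** 2.
    """
    x1, x2 = x
    r2 = math.sqrt(2.0)
    return [1.0, r2 * x1, r2 * x2, x1 * x1, x2 * x2, r2 * x1 * x2]


if __name__ == "__main__":
    a, b = (1.0, 2.0), (3.0, -1.0)  # arbitrary sample vectors
    print(poly_kernel(a, b))
    print(dot(explicit_features(a), explicit_features(b)))
```

Both printed values agree up to floating-point rounding. The kernel lets an SVM work in the larger feature space without ever building the transformed vectors, which matters when that space has far more than six dimensions.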

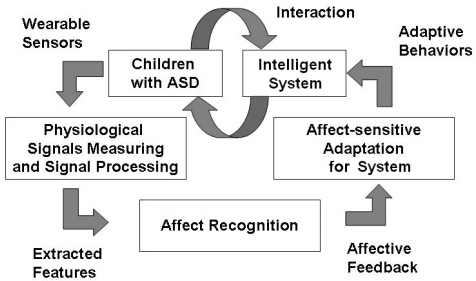

The framework of interactions they developed around the subjects and the machines is shown schematically below:

Given the data from the sensors worn by the children, an intelligent system decides the next course of action. The system’s resulting behavior in turn influences the children engaged with it, forming a closed loop (Conn et al., 2008). The setup included 15-year-old participants, an experimenter who interacted with the system, and a therapist and a parent as hidden observers. The children’s cognitive tasks included solving anagrams and playing a Pong game. The set of biosensors involved was intimidating; a short list follows:

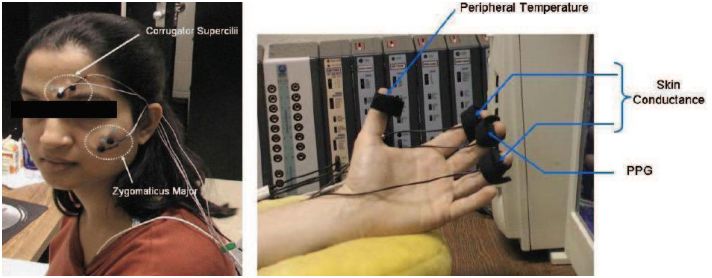

- a microphone to detect heartbeats

- hand electrodes to detect body temperature, skin conductance (sweat), and PPG (photoplethysmography, to sense the rate of blood flow)

- electromyogram (EMG) with facial electrodes to detect facial expressions (smile, frown, blink rate…)

Signal processing included “Fourier transformations, wavelet transform, thresholding, and peak detection” (Conn et al., 2008), and the resulting features were used to build an affective model. Their SVM-based affective model “yielded approximately 82.9% success for predicting affect inferred from a therapist” (Conn et al., 2008). The liking level predicted in real time reached 81.1% accuracy.
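Two of the steps quoted above, thresholding and peak detection, can be sketched in a few lines. The example below assumes a clean synthetic sine wave standing in for a pulse signal, a 50 Hz sampling rate, and a fixed threshold of 0.5. All three are illustrative choices, not values from Conn et al. (2008), and real physiological signals would need filtering first.

```python
import math


def detect_peaks(signal, threshold):
    """Return indices of local maxima that rise above the threshold."""
    peaks = []
    for i in range(1, len(signal) - 1):
        above = signal[i] > threshold
        # Strictly higher than the previous sample, at least as high as the next.
        is_local_max = signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
        if above and is_local_max:
            peaks.append(i)
    return peaks


def beats_per_minute(signal, sample_rate_hz, threshold):
    """Estimate a rate from the mean spacing between detected peaks."""
    peaks = detect_peaks(signal, threshold)
    if len(peaks) < 2:
        return None  # not enough peaks to measure an interval
    intervals = [(b - a) / sample_rate_hz for a, b in zip(peaks, peaks[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


# Synthetic stand-in for a pulse signal: 1.2 Hz (72 beats per minute), 10 s long.
SAMPLE_RATE_HZ = 50
SYNTHETIC_PULSE = [
    math.sin(2 * math.pi * 1.2 * n / SAMPLE_RATE_HZ) for n in range(500)
]

if __name__ == "__main__":
    print(beats_per_minute(SYNTHETIC_PULSE, SAMPLE_RATE_HZ, threshold=0.5))
```

On this synthetic input the estimate comes out close to 72 beats per minute, with a small error because peak positions are rounded to whole samples. A feature such as this rate is the kind of value that could then be passed to a classifier.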

Conclusions

Although these results are encouraging, they showed some limits, such as the need to wear physiological sensors, which is highly restrictive in a real-life application. Prediction success also depended on the emotional model chosen, since the results are sensitive to how emotions are modeled. The researchers stressed the need to improve the algorithms so that they make the best use of the limited set of sensors (sound and vision) that a real-life setting would allow. Their planned future work was the development of interactive face recognition software that could also convey facial emotions through a life-like 3D face model. Non-intrusive sensors such as visual face recognition are at the heart of today’s research.